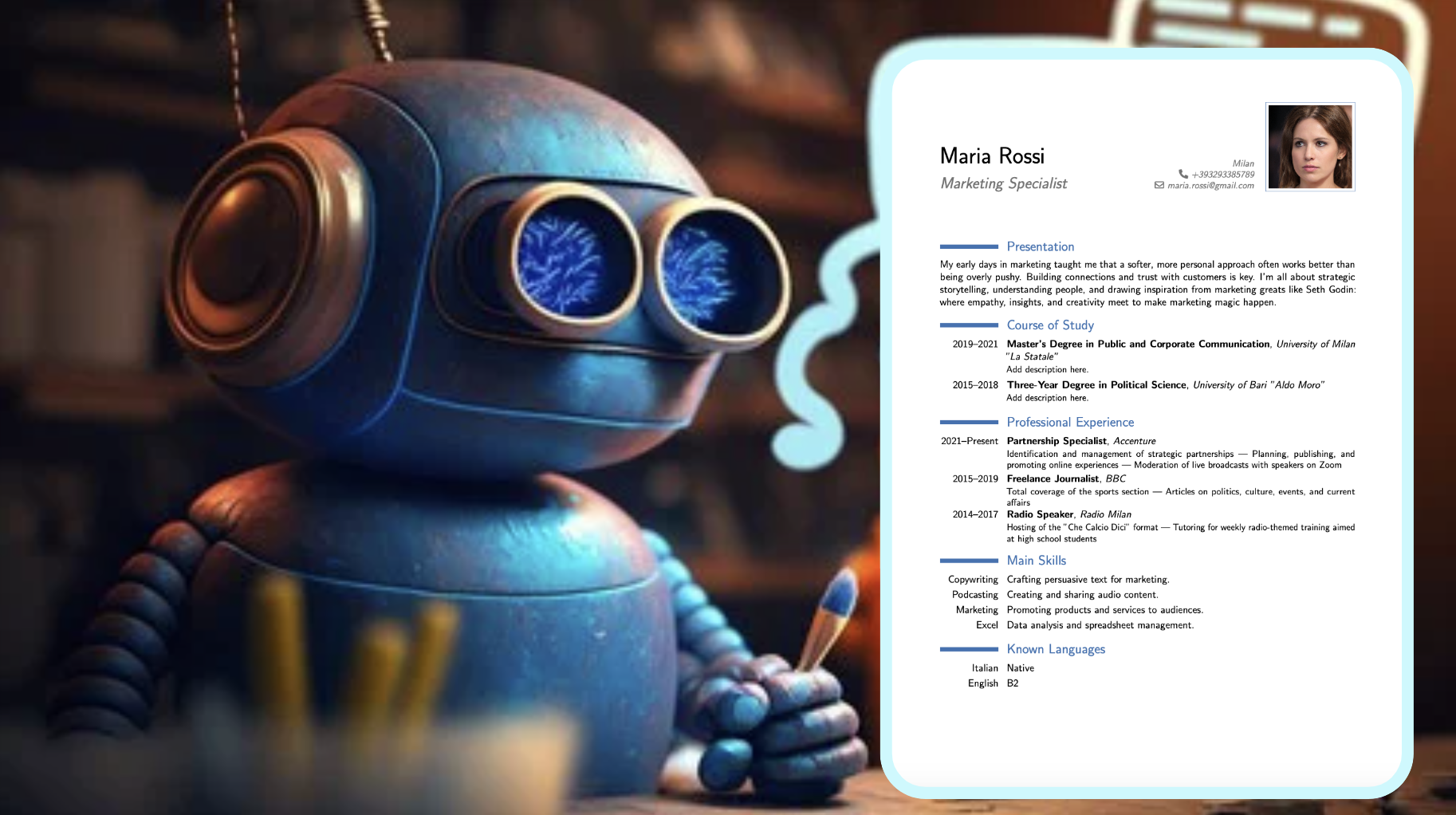

Write your professional CV in LaTeX with ChatGPT in 4 minutes!

Are you eager to dive into the world of work but still missing a polished, professional curriculum vitae? Don't worry: today we will put together a well-structured CV in a matter of minutes, with ChatGPT doing the heavy lifting. Wondering why I am so confident?

The blueprint was put together by an HR professional with over 25 years of industry experience. The formatting is handled by LaTeX, a typesetting system widely used in the scientific world and valued for its clean, professional output. You don't need to know LaTeX yourself: my prompt and ChatGPT take care of the details so that your CV looks its best.

Step by step:

- Ask ChatGPT using the right prompt

- Compile the LaTeX code

- Apply for your next 300k job
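
To give a rough idea of what the second step works with, here is a minimal sketch of the kind of LaTeX source ChatGPT might hand back. It is only an illustration, not the blueprint itself: the name, employer, dates, and section titles are placeholders I made up, and the layout assumes the standard `article` class with the `geometry` and `hyperref` packages.

```latex
% Minimal CV sketch. All personal details below are placeholders.
\documentclass[11pt,a4paper]{article}
\usepackage[margin=2cm]{geometry}   % narrower margins than the default
\usepackage[hidelinks]{hyperref}    % clickable e-mail link without colored boxes
\pagestyle{empty}                   % no page number on a one-page CV
\setlength{\parindent}{0pt}         % no paragraph indentation

\begin{document}

\begin{center}
  {\LARGE\bfseries Jane Doe}\\[4pt]
  \href{mailto:jane.doe@example.com}{jane.doe@example.com}
\end{center}

\section*{Experience}
\textbf{Data Analyst}, Example Corp \hfill 2021--2024
\begin{itemize}
  \item Placeholder bullet describing a responsibility or result.
\end{itemize}

\section*{Education}
\textbf{BSc in Computer Science}, Example University \hfill 2017--2021

\end{document}
```

If you save this as `cv.tex` (a file name chosen here for illustration), running `pdflatex cv.tex` on a machine with a LaTeX distribution installed should produce `cv.pdf`. An online LaTeX editor works just as well if you prefer not to install anything.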

Let’s get started without further delay!